Next, we will explain the "Technologies Involved to in Pass/Fail Classification" (technologies associated with the 'brain' in visual determination).

1. Two Steps in Determination

Determining pass/fail through image processing involves two major steps.

The first step is to "Recognizing Areas to Be Determined in the Image," and the second step is to "Establishing Pass/Fail Criteria for the Extracted Areas".

(1) About the Step of "Recognizing Areas to Be Determined in the Image"

The ultimate goal of inspection using image processing is to determine pass or fail, but it is necessary to define "what to focus on" as the criteria for this determination. The process for doing this is the step of "Recognizing Areas to Be Determined in the Image".

The extraction of areas to be determined using image processing can be broadly divided into two methods: using AI (here, we refer to the inspection mode that uses Deep Learning as "AI") and the method called rule-based.

The biggest difference between AI and rule-based methods is whether the adjustment to find the criteria for extracting inspection areas is left to the software using Deep Learning ("AI") or whether humans select the optimal determination method according to the situation ("rule-based").

(2) About the Step of "Establishing Pass/Fail Criteria for the Extracted Areas"

Pass/fail criteria are set for the extracted content defined in the step of "Recognizing Areas to Be Determined in the Image." The criteria include extracted area, quantity, and the content of identified text, among others.

For instance, if the target is extracted using AI, the pass/fail determination is made based on the "number of detected targets." If the rule-based method is chosen, such as comparing with the original image and extracting the differences, the pass/fail determination is made based on the "total extracted area of the differences."

2. The Difference Between AI and Rule-Based Approach

Both determination techniques have their own characteristics and considerations, and it is necessary to understand these in order to set the appropriate image processing conditions.

| Step | AI | Rule-Based | |

| Extraction of Discrimination Areas | Features | ・One can extract ambiguous areas where defining them unambiguously is challenging. ・In cases of uneven imaging environments, extraction of discernible areas is still achievable. ・Human intervention is only necessary for preparing training data and instructing extraction areas (annotation), eliminating the need for complicated tool selection (some algorithms even negate the need for annotation). | ・The extraction rules for determination areas are clear. ・Adjustments to the extraction areas can be made arbitrarily by changing the specified values. ・Setting up simple extraction areas can be done quickly. |

| Considera- tion | ・The logic behind the extraction is unclear. ・Dependent on annotation and learning, making adjustment to extraction areas challenging. ・Requires effort in preparing images for learning. | ・Defining complex extraction conditions is challenging. ・Image uniformity (no shadows, uniform color) and consistency (no differences due to product variations) are required. ・A certain level of knowledge is necessary to determine which extraction can be done in which mode. | |

| Method for Determining Criteria | Features | ・Determination based on the detected quantity or textual content. ・Determination based on scores or matching indicators. | ・Selection can be made based on the number of pixels between edges, the area of the extraction region, the number of items, the content of characters, etc. |

| Considera- tion | ・Determination based on quantitative measures such as area or number of pixels is not possible. | ・A certain level of knowledge is required to determine which criteria to use in each mode of determination. | |

3. Various AI Algorithms

In essence, "AI-based determination" can be broadly categorized into several types based on the type of training data and the method of determinating areas extraction.

(1)Difference in Learning Data: "Unsupervised Learning" for memorizing good products and "Supervised Learning" for memorizing defective products

In AI, there are "Unsupervised Learning," which learns images of good products and identifies differing parts as defective, and "Supervised Learning," which specifies defective parts in images and learns them.

"Unsupervised Learning" does not require preparing defective products, and even if it is unclear what defects may occur, it can detect differences as defects based on features learned as good products.

Therefore, it is often considered as the initial step of determination by AI due to its ease of preparation and the lack of need to individually set defective products.

On the other hand, in cases where defects are overlooked or good products are mistakenly identified as defects in "Unsupervised Learning" algorithms, using "Supervised Learning" algorithms may improve inspection accuracy.

(2) Differences in Extracted Areas: "Image Classification," "Object Detection," and "Segmentation"

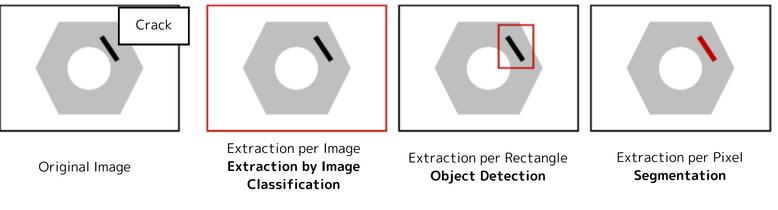

Differences in Results Obtained by Algorithms: "Image Classification," "Object Detection," and "Segmentation"

Depending on the algorithm used, the results obtained for determination vary as follows:

Image Classification: Determines what type of image the entire image represents (e.g., categorizing images as good or containing cracks).

Object Detection: Identifies the location, quantity, and type of objects within an image in rectangular units (e.g., extracting rectangles indicating where cracks are in an image).

Segmentation: Identifies the location, quantity, type, and size of objects within an image in pixel units (e.g., extracting information on where cracks are, how many there are, and their size in pixels).

Many image analysis software tools for defect detection adopt either object detection (rectangular determination) or segmentation (pixel-level determination) because they want to verify the number and size of the objects to be extracted. Some software also allows determination based on the number of extracted pixels, especially when combined with rule-based algorithms, by extracting at the pixel level.

Moreover, these algorithms significantly impact how extraction areas are indicated (referred to as annotation in AI terminology). In object detection, extraction areas are enclosed in rectangles, whereas in segmentation, extraction areas need to be specified at the pixel level.Using segmentation allows for size determination based on the number of pixels, which is one advantage.

On the other hand, object detection, which completes annotation by simply enclosing areas in rectangles, can reduce the annotation workload compared to segmentation.

4. Approach to Learning with AI

When conducting determination using image processing with AI, conditions for determination are set through the following steps.

Data Collection: Gather image data to be used as determination criteria.

(Annotation): When using algorithms for supervised learning, indicate the extraction areas.

Training: Use the collected data to create an algorithm (referred to as a model) using deep learning to extract determination areas.

Evaluation and Tuning: Evaluate the model using both the training images and images not used in training to confirm if the optimal determination results are achieved. Often, initial misclassifications occur, necessitating re-collection of data or re-annotation.

5. Summary

With improvements in computer performance and advancements in technology, the barriers to implementing image analysis technology in the visual inspection process are decreasing.

On the other hand, it is necessary to carefully determine whether image analysis technology, touted as "highly accurate" and "easy to operate," truly offers the optimal inspection accuracy for your products and can be effectively operated in-house.